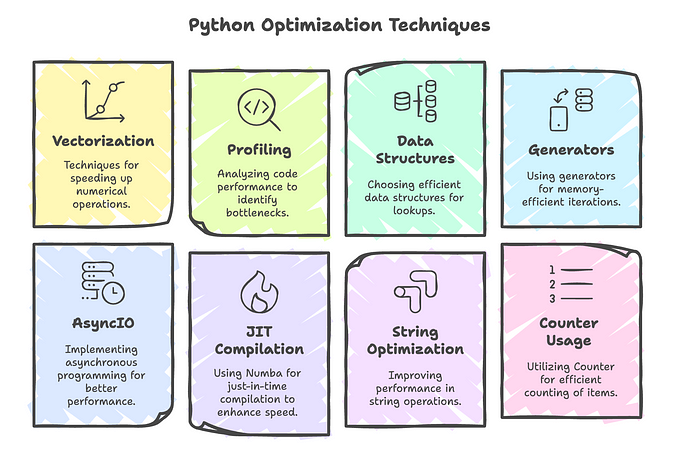

Managing memory and speed in Python can be confusing and annoying. Thankfully, Python has a built-in language feature that helps keep the memory footprint small and can often improve speed as well: Python Generators, which are written with the yield command. In this blog post, I will walk you through what Generators and the yield command are and show you an example that uses both.

What in the World is a Python Generator and What is this Yield Command?

Python Generators and the yield command were proposed in PEP 255, written in May 2001, and first shipped in Python 2.2. A Generator is a function that, instead of returning a single value, returns a special kind of iterator: a lazy iterator. A lazy iterator produces each value only when it is asked for. In Python, these lazy iterators are similar to lists in that you can loop through them, but they do not hold all of their contents in memory at once, which is a big game changer for minimizing memory use. An example of a basic generator can be seen below:

    def generate_a_generator():
        for i in range(10):
            yield i

    a_generator = generate_a_generator()
    for x in a_generator:
        print(x)

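As you can see, the only visible difference between a generator and a regular function is that it uses yield instead of return. Each time the loop asks for the next value, the function runs until it reaches yield, hands back one element, and pauses there until the next request.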
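To make the lazy behavior a little more concrete, here is a minimal sketch that steps through a generator by hand with next() and compares its size with an equivalent list. The name count_up_to is made up for this illustration, and sys.getsizeof only measures the container object itself, so treat the comparison as a rough indication rather than a full memory profile.

```python
import sys


def count_up_to(limit):
    """Hypothetical helper: lazily yield 0, 1, ..., limit - 1."""
    for i in range(limit):
        yield i


numbers = count_up_to(3)

# Nothing in the function body runs until a value is requested.
first = next(numbers)   # 0
second = next(numbers)  # 1
third = next(numbers)   # 2

# A fourth request finds the generator exhausted and raises StopIteration.
try:
    next(numbers)
    exhausted = False
except StopIteration:
    exhausted = True

# The list keeps a reference to every element;
# the generator object only keeps its paused state.
as_list = list(range(100_000))
as_generator = count_up_to(100_000)
print(sys.getsizeof(as_list) > sys.getsizeof(as_generator))  # True
```

A for loop does the same thing behind the scenes: it calls next() repeatedly and stops quietly when StopIteration is raised.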

Example of Utilizing Yield and Generators

Imagine that you need to fetch a giant API response and write it to a newline-delimited JSON GZIP file, and you are limited to a fixed amount of memory. How would you go about this? If you said utilize a Generator and the yield command, you are correct!

Here is an example of fetching that giant API response without the yield command and without a Generator:

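    import gzip
    import json

    def fetch_from_api():
        # urls_list and apiServer are assumed to be defined elsewhere.
        raw_data = list()
        for use_url in urls_list:
            response = apiServer.get(use_url)
            # Every batch is appended, so the list grows with each request.
            raw_data.extend(response.json()["data"])
        return raw_data

    api_data = fetch_from_api()
    with gzip.open("filename.json.gz", "w") as o_file:
        for row in api_data:
            o_file.write((json.dumps(row) + "\n").encode("utf-8"))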

The above example's memory usage will explode if the API responses are significant in size, because every row has to sit in one list before anything is written to disk! Below is an example of utilizing the yield command and a Python Generator:

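    import gzip
    import json

    def fetch_from_api_generator():
        # urls_list and apiServer are assumed to be defined elsewhere.
        for use_url in urls_list:
            response = apiServer.get(use_url)
            # Hand back one batch at a time instead of accumulating them all.
            yield response.json()["data"]

    api_data_generator = fetch_from_api_generator()
    with gzip.open("filename.json.gz", "w") as o_file:
        for batch in api_data_generator:
            for row in batch:
                o_file.write((json.dumps(row) + "\n").encode("utf-8"))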

It may appear initially that the second example will be slower since it has two for loops, but it does the same amount of work while using far less memory. Specifically, the API fetch function yields each response's data value, which is then iterated over by the inner for loop that writes to the gzip file. Only one API fetch batch is held in memory at a time, whereas the first example collects all of the API fetch batches into one main list before anything is written.
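If you would like to try this pattern without a real API, the following is a self-contained sketch under a few assumptions: FAKE_RESPONSES, fetch_batches, and write_ndjson_gz are hypothetical names invented for this illustration, and a small dictionary stands in for the API server. It also opens the gzip file in text mode ("wt"), which is one way to avoid encoding each line by hand.

```python
import gzip
import json
import os
import tempfile

# Hypothetical stand-in for the API: each "URL" maps to one batch of rows.
FAKE_RESPONSES = {
    "https://example.invalid/page/1": [{"id": 1}, {"id": 2}],
    "https://example.invalid/page/2": [{"id": 3}],
}


def fetch_batches(urls):
    """Yield one batch per URL instead of building one large list."""
    for url in urls:
        yield FAKE_RESPONSES[url]  # a real version would call the API here


def write_ndjson_gz(batches, path):
    """Write rows as gzip-compressed newline-delimited JSON; return the row count."""
    rows_written = 0
    # "wt" opens the gzip file in text mode, so no manual encode() is needed.
    with gzip.open(path, "wt", encoding="utf-8") as o_file:
        for batch in batches:
            for row in batch:
                o_file.write(json.dumps(row) + "\n")
                rows_written += 1
    return rows_written


out_path = os.path.join(tempfile.mkdtemp(), "filename.json.gz")
count = write_ndjson_gz(fetch_batches(list(FAKE_RESPONSES)), out_path)

# Read the file back to check the round trip.
with gzip.open(out_path, "rt", encoding="utf-8") as i_file:
    rows = [json.loads(line) for line in i_file]
print(count, rows)
```

Because write_ndjson_gz accepts any iterable of batches, the same writer works whether the batches come from a generator or from a list that already exists.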

When to Not Use a Python Generator?

Never! Just kidding, there are definitely times where not using a Python Generator is the correct choice.

Below are some examples of times when a Generator might not be the best choice:

- You need to access the data more than once

Python Generators supply you with a single run through of the data; as a result, if you need to loop multiple times, a Generator might not be the best choice.

- You need random access to specific indices

Python Generators do not support indexing; as a result, you will not want to use a Generator if you need random access.
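Both limitations are easy to see in a short experiment. The sketch below uses a made-up generator called squares; it shows a second pass coming back empty, an indexing attempt raising TypeError, and itertools.islice as one possible workaround when you only need to skip ahead.

```python
from itertools import islice


def squares(limit):
    """Hypothetical generator used only for this demonstration."""
    for i in range(limit):
        yield i * i


gen = squares(5)
first_pass = list(gen)   # consumes every value
second_pass = list(gen)  # the generator is now exhausted

# Indexing a generator is not supported.
try:
    squares(5)[2]
    index_error = None
except TypeError as exc:
    index_error = exc

# Workarounds: materialize into a list if it fits in memory, or skip ahead
# with islice, which still consumes the values before the one you want.
third_square = next(islice(squares(5), 2, None))

print(first_pass, second_pass, third_square)
```

If the data fits comfortably in memory and you need either repeated passes or indexing, converting to a list once is usually the simpler choice.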

Conclusion

Thank you for taking the time to read this article and learn more about Python Generators and the Python yield command. I hope that this article will help kickstart you in utilizing these when the time is right. Generators are a great Python feature that can save plenty of memory in your programs and, in many cases, speed up your code as well.